New task

Subsumes phrase grounding and open-vocabulary detection

Manually curated

Manually curated high-quality dataset of more than 2.6k examples.

Hard negatives

Examples crafted to be hard for existing methods.

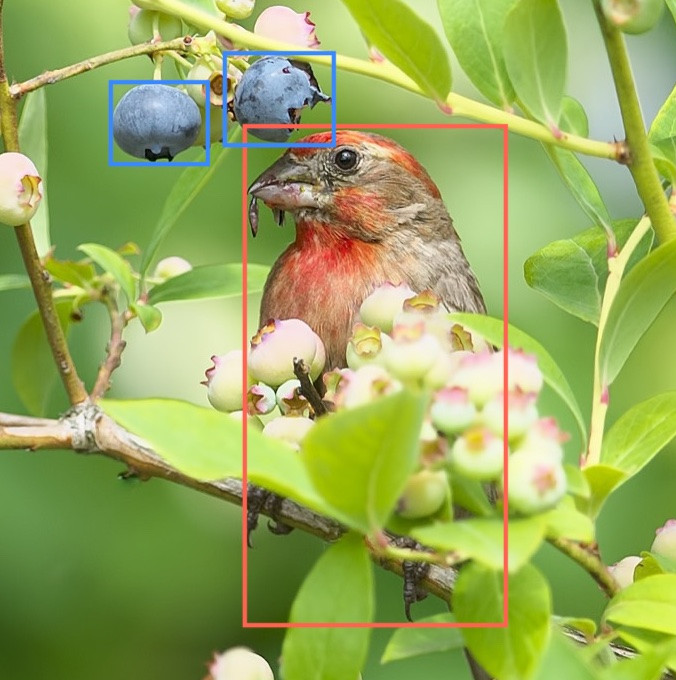

Current state of the art visual question answering (VQA) systems display impressive performance on popular benchmarks, however often fail when evaluated on challenging images such as those shown above. We propose a novel task titled 'Contextual Phrase Detection' which evaluates models' fine-grained vision and language understanding capabilities along with a human-labelled dataset (TRICD) that enables evaluation on this novel task. In addition to assessing whether the objects are visible in the scene, models are required to produce bounding boxes to localize them.

Examples

People

Aishwarya Kamath

New York University

Sara Price

New York University

Jonas Pfeiffer

New York University

Yann LeCun

New York University

Nicolas Carion

New York University