- What: A new architecture for Vision and Language tasks + a new pre-training strategy that benefits both image level and region level tasks.

- How: We add cross-modality attention blocks into the image and text backbones & split pre-training into low and high resolution stages.

- Outcome: State-of-the-art results on captioning, VQA, NLVR2, and more + efficient use of expensive fine-grained data, surpassing the phrase grounding performance of models that use 25x more box-annotated data!

Abstract

Vision-language (VL) pre-training has recently received considerable attention. However, most existing end-to-end pre-training approaches either only aim to tackle VL tasks such as image-text retrieval, visual question answering (VQA) and image captioning that test high-level understanding of images, or only target region-level understanding for tasks such as phrase grounding and object detection. We present FIBER (Fusion-In-the-Backbone-based transformER), a new VL model architecture that can seamlessly handle both these types of tasks. Instead of having dedicated transformer layers for fusion after the uni-modal backbones, FIBER pushes multimodal fusion deep into the model by inserting cross-attention into the image and text backbones, bringing gains in terms of memory and performance. In addition, unlike previous work that is either only pre-trained on image-text data or on fine-grained data with box-level annotations, we present a two-stage pre-training strategy that uses both these kinds of data efficiently: (i) coarse-grained pre-training based on image-text data; followed by (ii) fine-grained pre-training based on image-text-box data. We conduct comprehensive experiments on a wide range of VL tasks, ranging from VQA, image captioning, and retrieval, to phrase grounding, referring expression comprehension, and object detection. Using deep multimodal fusion coupled with the two-stage pre-training, FIBER provides consistent performance improvements over strong baselines across all tasks, often outperforming methods using magnitudes more data.
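To make the fusion-in-the-backbone idea more concrete, here is a minimal sketch of a cross-attention update applied to the tokens of one modality using the tokens of the other. It is an illustration under stated assumptions, not the released implementation: the function names are hypothetical, the attention is single-head with no learned projections, the self-attention and feed-forward parts of each backbone layer are omitted, and the scalar gate `alpha` is an assumption added here so that setting it to zero recovers the uni-modal features.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, context):
    """Single-head scaled dot-product attention: each query token attends to
    all context tokens. Learned projections are omitted (assumption)."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in context]
        weights = softmax(scores)
        out.append([sum(w * k[j] for w, k in zip(weights, context))
                    for j in range(d)])
    return out


def fused_block(x, other, alpha):
    """Residual cross-modal update: x + alpha * CrossAttn(x, other).
    `alpha` is a hypothetical gate; alpha = 0 disables fusion."""
    attended = cross_attention(x, other)
    return [[xi + alpha * ai for xi, ai in zip(x_row, a_row)]
            for x_row, a_row in zip(x, attended)]


# Toy token features (3 image tokens, 2 text tokens, dimension 2).
image_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text_tokens = [[0.5, 0.5], [2.0, 0.0]]

unchanged = fused_block(image_tokens, text_tokens, alpha=0.0)
fused_image = fused_block(image_tokens, text_tokens, alpha=0.5)  # image attends to text
fused_text = fused_block(text_tokens, image_tokens, alpha=0.5)   # text attends to image
```

Because the update is applied in both directions inside the backbones, no separate stack of fusion layers sits on top of the uni-modal encoders in this sketch.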

Main Results

We evaluate our coarse-grained and fine-grained pretrained models on a variety of tasks, including visual question answering, visual reasoning, image-text retrieval, image captioning, phrase grounding, and object detection, and demonstrate state-of-the-art performance on many of them. FIBER is pretrained with 4M images during coarse-grained pretraining and with 860k images during fine-grained pretraining.

| Task | VQAv2 | NLVR2 | F30k Retrieval | COCO Retrieval | COCO Captioning |
|---|---|---|---|---|---|
| Split | test-std | test-P | test | Karpathy test | Karpathy test |
| Metric | VQA Score | Acc. | IR@1/TR@1 | IR@1/TR@1 | CIDEr |
| FIBER-Base | 78.46 | 85.52 | 81.44/92.90 (ITC) 84.10/95.10 (ITM) | 58.01/75.38 (ITC) 59.03/75.14 (ITM) | 144.4 |
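As a reading aid for the IR@1/TR@1 columns, the following sketch computes Recall@K from a text-image similarity matrix. It assumes a simplified setup in which query `i` has exactly one correct candidate, also at index `i`; the actual benchmarks pair each image with several captions, so this is not the exact evaluation protocol. The function names and toy scores are illustrative.

```python
def recall_at_k(sim, k):
    """sim[i][j] is the similarity of query i and candidate j.
    Assumes the ground-truth match for query i is candidate i."""
    hits = 0
    for i, row in enumerate(sim):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return 100.0 * hits / len(sim)


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


# Rows are text queries, columns are images (toy scores).
text_to_image = [
    [0.9, 0.1, 0.3],
    [0.2, 0.4, 0.8],
    [0.1, 0.2, 0.7],
]

ir_at_1 = recall_at_k(text_to_image, k=1)             # image retrieval: text -> image
tr_at_1 = recall_at_k(transpose(text_to_image), k=1)  # text retrieval: image -> text
```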

| Task | F30k Grounding | RefCOCO | RefCOCO+ | RefCOCOg |
|---|---|---|---|---|
| Split | test | val/testA/testB | val/testA/testB | val/test |
| Metric | R@1/R@5/R@10 | Acc. | Acc. | Acc. |
| FIBER-Base | 87.4/96.4/97.6 | 90.68/92.59/87.26 | 85.74/90.13/79.38 | 87.11/87.32 |
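Grounding and referring expression accuracy are typically scored by box overlap. The sketch below assumes the common convention that a predicted box counts as correct when its intersection-over-union (IoU) with the ground-truth box is at least 0.5; this page does not state the threshold, so treat it as an assumption. Boxes are `(x1, y1, x2, y2)` tuples and the function names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(predictions, targets, threshold=0.5):
    """Percentage of predicted boxes with IoU >= threshold (assumed 0.5)."""
    correct = sum(iou(p, t) >= threshold for p, t in zip(predictions, targets))
    return 100.0 * correct / len(targets)


# Toy example: one box per phrase.
predicted = [(0, 0, 10, 10), (0, 0, 10, 10), (0, 0, 10, 10)]
ground_truth = [(0, 0, 10, 10), (5, 5, 15, 15), (0, 0, 10, 8)]

accuracy = grounding_accuracy(predicted, ground_truth)
```

In the toy example, the second prediction overlaps its target too little to count, while the first and third pass the threshold.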

| Task | COCO Detection | LVIS | ODinW |
|---|---|---|---|
| Split | Val 2017 | MiniVal | 13 Datasets |
| Metric | Zero-shot/Fine-tune AP | Zero-shot/Fine-tune AP | Avg. Zero-shot/Fine-tune AP |
| FIBER-Base | 49.3/58.4 | 35.8/56.9 | 47.0/65.9 |

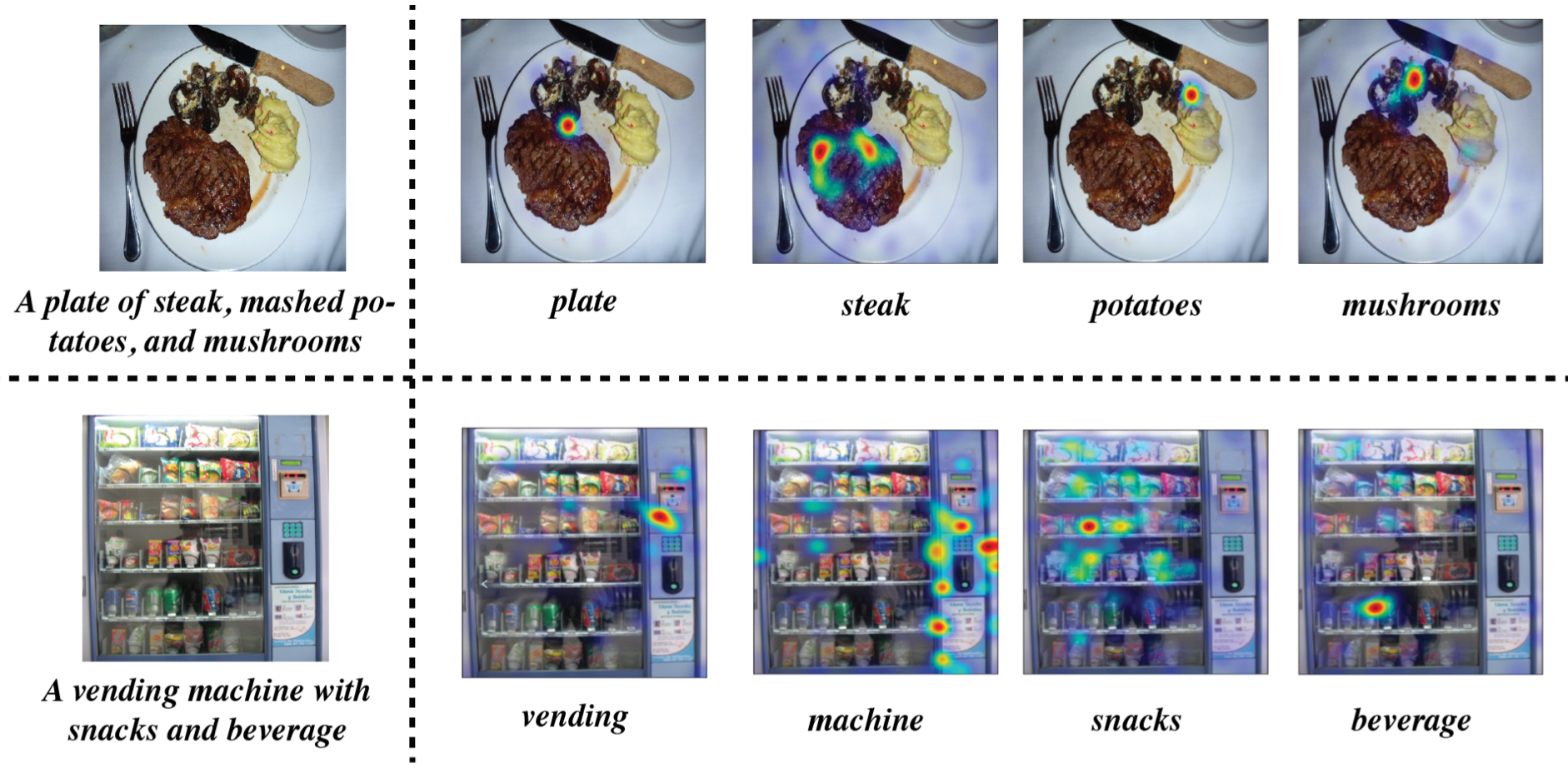

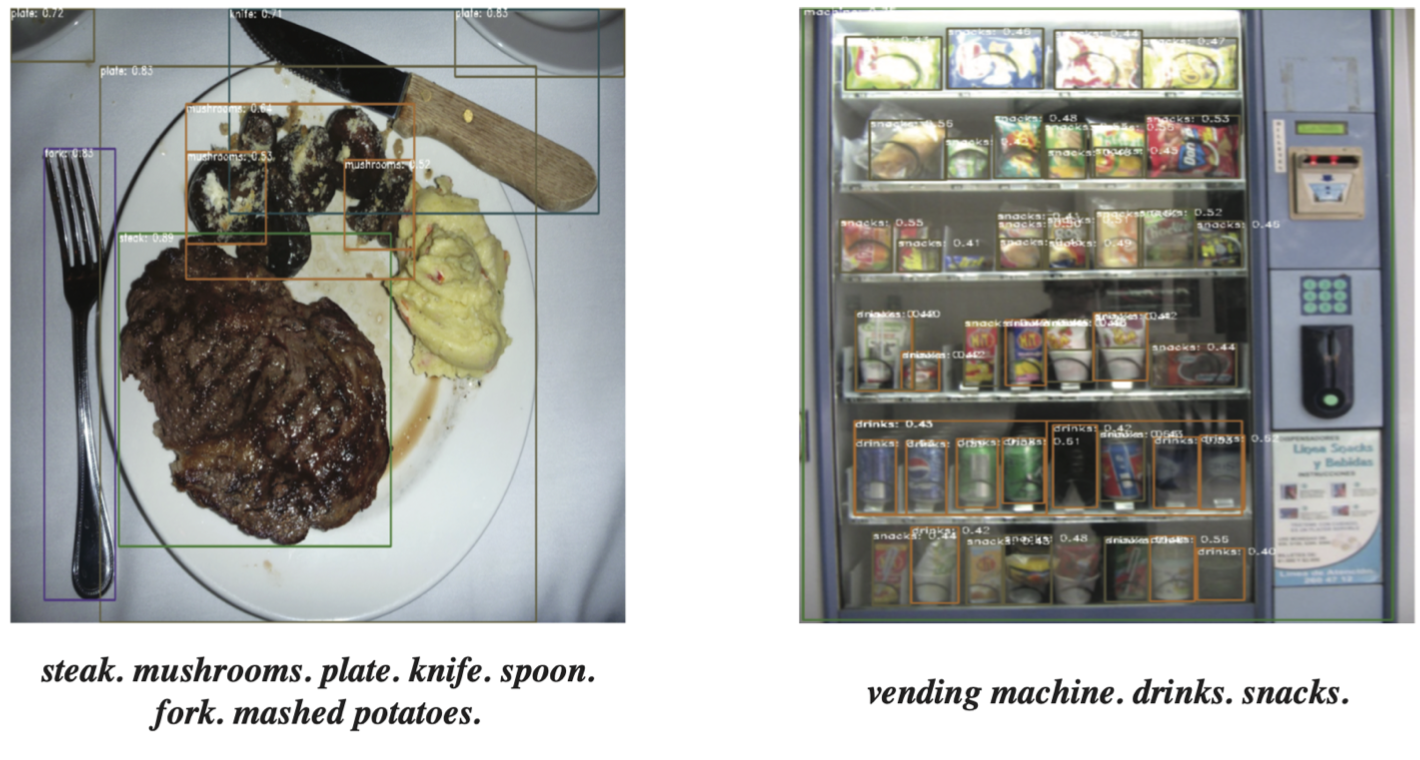

Visualization of the pre-trained models

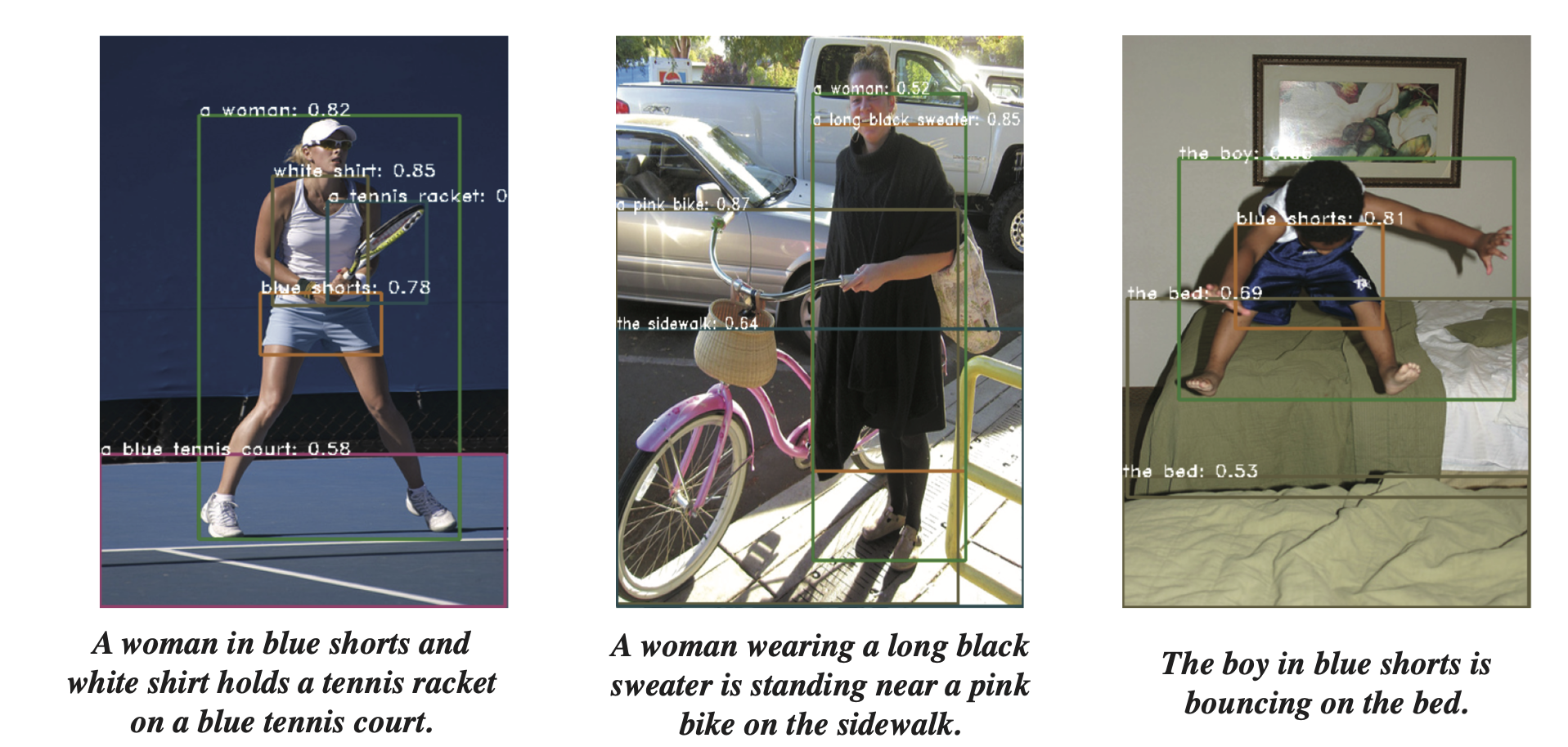

From the examples below, we can see that our coarse-grained pretrained model learns to perform phrase grounding implicitly despite being given only image-caption pairs, and that fine-grained pretraining further improves the model's grounding performance, allowing us to localize objects more accurately.

Some results on Flickr30k Entities

Referring Expression Comprehension